Here at HCC, we have a few VM-based projects going. One is the Condor-based VM launching that Ashu referenced in his previous posting. That project takes an existing capability (the Condor batch system hooked to the grid) and extends it: instead of launching processes, one can launch an entire VM.

One of our other employees, Josh, has been working from the other direction: taking a common "cloud platform", OpenStack, and seeing if it can be adapted to our high-throughput needs. The OpenStack work is in its beginning phases, but bits and pieces are starting to become functional.

Last night, I tried out the install for the first time. One of the initial tasks I wanted to accomplish was to create a custom VM. A lot of the OpenStack documentation is fairly Ubuntu-specific, so I've taken their pages and adapted them for installing from a CentOS 5.6 machine. Unfortunately, I didn't take any nice screenshots like Ashu did, but I hope this will be useful to others.

Long term, we plan to open OpenStack up to select OSG VOs for testing. While we are still in the "tear it down and rebuild once a week" mode, it's just been opened up to select HCC users.

So, without further ado, I present...

Creating a new Fedora image using HCC's OpenStack

These notes are based on the upstream OpenStack documentation here:

http://docs.openstack.org/trunk/openstack-compute/admin/content/creating-a-linux-image.html

Prerequisites

It all starts with an account.

For local users, contact hcc-support to get your access credentials. They will come in a zipfile. Download the zipfile into your home directory and unpack it. Among other things, there will be a novarc file. Source this:

source novarc

This will set up environment variables in your shell pointing to your login credentials. Do not share these with other people! You will need to do this each time you open a new shell.
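Since it is easy to forget this step in a fresh shell, here is a minimal sketch of a check you could run before using the tools. The function name check_credentials is hypothetical, and the variable names in the usage comment are an assumption about what your novarc exports; look inside your own file for the real list.

```shell
# Report which of the named environment variables are unset or empty.
# Returns 0 when every variable is exported and non-empty, 1 otherwise.
check_credentials() {
    missing=0
    for name in "$@"; do
        # printenv only sees exported variables, which is what the
        # command-line tools will see as well.
        if [ -z "$(printenv "$name")" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Assumed variable names; adjust to match your novarc:
# check_credentials EC2_ACCESS_KEY EC2_SECRET_KEY EC2_URL
```

If it reports anything missing, source novarc again in that shell.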

To create the image, you will need root access on a development machine with KVM installed. I used a CentOS 5.6 machine and did:

yum groupinstall kvm

to get the various necessary KVM packages. I als

First, create a new raw image file:

qemu-img create -f raw /tmp/server.img 5G

This will be the block device that is presented to your virtual machine; make it as large as necessary. Our current hardware is pretty space-limited: smaller is encouraged. Next, download the Fedora boot ISO:

curl http://serverbeach1.fedoraproject.org/pub/alt/bfo/bfo.iso > /tmp/bfo.iso

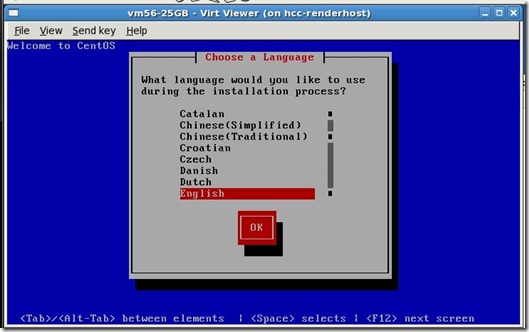

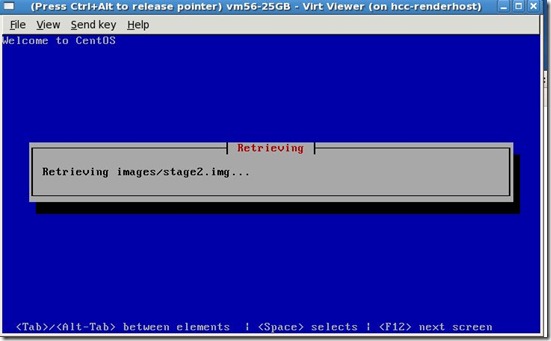

This is a small, 670KB ISO file that contains just enough information to bootstrap the Anaconda installer. Next, we'll boot it as a virtual machine on your local system.

sudo /usr/libexec/qemu-kvm -m 2048 -cdrom /tmp/bfo.iso -drive file=/tmp/server.img -boot d -net nic -net user -vnc 127.0.0.1:0 -cpu qemu64 -M rhel5.6.0 -smp 2 -daemonize

This will create a simple virtual machine (2 cores, 2GB RAM) with /tmp/server.img as a drive, and boot the machine from /tmp/bfo.iso. It will also allow you to connect to the VM via a VNC viewer.

If you are physically on the host machine, you can use a VNC viewer for screen ":0". If you are logged in remotely (I log in from my Mac), you'll want to port-forward:

ssh -L 5900:localhost:5900 username@remotemachine.example.com

From your laptop, connect to localhost:0 with a VNC viewer. Note that the most common VNC viewers on the Mac (the built-in Remote Viewer and Chicken of the VNC) don't work with KVM. I found that "JollyFastVNC" works, but costs $5 from the App Store.
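The port number in the ssh command follows the usual VNC convention: display N listens on TCP port 5900 + N, so the ":0" passed to -vnc corresponds to port 5900. A tiny sketch of that arithmetic, in case you run several VMs on different displays (the helper name vnc_port is made up for illustration):

```shell
# Print the TCP port that a given VNC display number listens on.
vnc_port() {
    echo $((5900 + $1))
}

# Example: forward the port for display :1 instead of :0
# ssh -L "$(vnc_port 1):localhost:$(vnc_port 1)" username@remotemachine.example.com
```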

Once logged in, select the version of Fedora you'd like to install, and "click next" until the installation is done. Fedora 15 is sure nice :)

Fedora will want to reboot the machine, but the reboot will fail because KVM is set to only boot from the CD. So, once it tries to reboot, kill KVM and start it again with the following arguments:

sudo /usr/libexec/qemu-kvm -m 2048 -drive file=/tmp/server.img -net nic -net user -vnc 127.0.0.1:0 -cpu qemu64 -M rhel5.6.0 -smp 2 -daemonize

Again, connect via VNC, and do any post-install customization. Start by updating and turning on SSH:

yum update
yum install openssh-server
chkconfig sshd on

You will need to tweak /etc/fstab to make it suitable for a cloud instance. Nova-compute may resize the disk at the time of launch of instances based on the instance type chosen. This can make the UUID of the disk invalid. Further, we will remove the LVM setup, and just have the root partition present (no swap, no /boot).

Edit /etc/fstab inside the VM. Change the following three lines:

/dev/mapper/VolGroup-lv_root / ext4 defaults 1 1
UUID=0abae194-64c8-4d13-a4c0-6284d9dcd7b4 /boot ext4 defaults 1 2
/dev/mapper/VolGroup-lv_swap swap swap defaults 0 0

to just one line:

LABEL=uec-rootfs / ext4 defaults 0 0
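If you build images often, the same edit can be scripted. The following is only a sketch under the assumption that your fstab looks like the one above (an LVM root, a separate /boot, and a swap entry); the function name cloud_fstab is hypothetical. It reads an fstab on stdin and writes the rewritten version to stdout, so you can inspect the result before replacing anything.

```shell
# Read an fstab on stdin and print a cloud-ready version on stdout:
# one label-based root entry, with the old root, /boot, and swap
# entries dropped. Comment lines and any other mounts pass through.
cloud_fstab() {
    echo 'LABEL=uec-rootfs / ext4 defaults 0 0'
    awk '
        /^#/ { print; next }                      # keep comments untouched
        $2 == "/" || $2 == "/boot" { next }       # drop old root and /boot
        $3 == "swap" { next }                     # drop swap
        { print }
    '
}

# Example (inside the VM), writing to a scratch file first:
# cloud_fstab < /etc/fstab > /tmp/fstab.new
```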

Since Fedora does not ship with an init script for OpenStack, we will do a nasty hack for pulling the correct SSH key at boot. Edit the /etc/rc.local file and add the following lines before the line "touch /var/lock/subsys/local":

depmod -a
modprobe acpiphp
# simple attempt to get the user ssh key using the meta-data service
mkdir -p /root/.ssh
echo >> /root/.ssh/authorized_keys
curl -m 10 -s http://169.254.169.254/latest/meta-data/public-keys/0/openssh-key | grep 'ssh-rsa' >> /root/.ssh/authorized_keys
echo "AUTHORIZED_KEYS:"
echo "************************"
cat /root/.ssh/authorized_keys
echo "************************"
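The grep in that pipeline is what keeps junk out of authorized_keys: if the metadata service answers with something other than a key (an error page, say), nothing gets appended. Here is the same filtering step as a standalone sketch you can try without a running instance. The function name append_rsa_keys is hypothetical, and unlike the rc.local line it anchors the match to the start of the line, which is a slightly stricter choice of mine.

```shell
# Append only lines that look like RSA public keys (read from stdin)
# to the given authorized_keys file, creating its directory if needed.
append_rsa_keys() {
    keyfile=$1
    mkdir -p "$(dirname "$keyfile")"
    # Anything not starting with "ssh-rsa " is silently discarded.
    grep '^ssh-rsa ' >> "$keyfile"
}

# Example, mirroring the rc.local hack:
# curl -m 10 -s http://169.254.169.254/latest/meta-data/public-keys/0/openssh-key \
#     | append_rsa_keys /root/.ssh/authorized_keys
```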

Once you are finished customizing, go ahead and power off:

poweroff

Converting to an acceptable OpenStack format

The image uploaded to OpenStack must be an ext4 filesystem image; we currently have a raw block device image. We will extract the filesystem by running a few commands on the host machine. First, we need to find out the starting sector of the partition. Run:

fdisk -ul /tmp/server.img

You should see an output like this (the error messages are harmless):

last_lba(): I don't know how to handle files with mode 81a4
You must set cylinders.
You can do this from the extra functions menu.

Disk /dev/loop0: 5368 MB, 5368709120 bytes
255 heads, 63 sectors/track, 652 cylinders, total 10485760 sectors
Units = sectors of 1 * 512 = 512 bytes

Device Boot Start End Blocks Id System
/dev/loop0p1 * 2048 1026047 512000 83 Linux
Partition 1 does not end on cylinder boundary.
/dev/loop0p2 1026048 10485759 4729856 8e Linux LVM
Partition 2 does not end on cylinder boundary.

Note the following commands assume the units are 512 bytes. You will need the start and end sector numbers for the "Linux LVM" partition; in this case, they are 1026048 and 10485759.
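If you would rather not copy the numbers by hand, here is a rough sketch that pulls them out of the fdisk output. It assumes the table layout shown above and only looks at the first "Linux LVM" line; the function name lvm_extent is made up. Because both the start and end sectors are inclusive, the partition length is end minus start plus one.

```shell
# Read `fdisk -ul` output on stdin and print "START COUNT" (in sectors)
# for the first Linux LVM partition found.
lvm_extent() {
    awk '/Linux LVM/ && $1 ~ /^\// {
        # A bootable partition has "*" in field 2, shifting the numbers over.
        s = ($2 == "*") ? 3 : 2
        # Start and end are both inclusive, hence the + 1.
        print $s, $(s + 1) - $s + 1
        exit
    }'
}

# Example:
# fdisk -ul /tmp/server.img 2>/dev/null | lvm_extent
```

For the table above this yields a start of 1026048 and a length of 9459712 sectors, which matches the 4729856 one-kilobyte blocks that fdisk reports.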

Copy the entire partition to a new file:

dd if=/tmp/server.img of=/tmp/server.lvm.img skip=1026048 count=$((10485759-1026048+1)) bs=512

For "skip", use the start sector from the fdisk output; for "count", use the end minus the start plus one, since both sectors are inclusive. Now we have our LVM image; we'll need to activate it. First, attach the LVM image to a loopback device and look for the volume group name:

[bbockelm@localhost ~]$ sudo /sbin/losetup /dev/loop0 /tmp/server.lvm.img
[bbockelm@localhost ~]$ sudo /sbin/pvscan
PV /dev/sdb1 VG vg_home lvm2 [7.20 TB / 0 free]
PV /dev/sda2 VG vg_system lvm2 [73.88 GB / 0 free]
PV /dev/loop0 VG VolGroup lvm2 [4.50 GB / 0 free]
Total: 3 [1.28 TB] / in use: 3 [1.28 TB] / in no VG: 0 [0 ]

Note the third listing is for our loopback device (/dev/loop0) and a volume group named, simply, "VolGroup". We'll want to activate that:

[bbockelm@localhost ~]$ sudo /sbin/vgchange -ay VolGroup
2 logical volume(s) in volume group "VolGroup" now active

We can now see the Fedora root file system in /dev/VolGroup/lv_root. We use dd to make a copy of this disk:

sudo dd if=/dev/VolGroup/lv_root of=/tmp/serverfinal.img

I get the following output:

[bbockelm@localhost ~]$ sudo dd if=/dev/VolGroup/lv_root of=/tmp/serverfinal.img
3145728+0 records in
3145728+0 records out
1610612736 bytes (1.6 GB) copied, 14.5444 seconds, 111 MB/s

It's time to release all our devices. Start by deactivating the volume group:

[bbockelm@localhost ~]$ sudo /sbin/vgchange -an VolGroup
0 logical volume(s) in volume group "VolGroup" now active

Then, detach our loopback device:

[bbockelm@localhost ~]$ sudo /sbin/losetup -d /dev/loop0

We will do one last tweak: change the label on our filesystem image to "uec-rootfs":

sudo /sbin/tune2fs -L uec-rootfs /tmp/serverfinal.img

*Note* that your filesystem image is ext4; if your host is RHEL5.x (this is my case!), your version of tune2fs will not be able to complete this operation. In this case, you will need to restart your VM in KVM with the newly-extracted serverfinal.img as a second hard drive. I did the following KVM invocation:

sudo /usr/libexec/qemu-kvm -m 2048 -drive file=/tmp/server.img -net nic -net user -vnc 127.0.0.1:0 -cpu qemu64 -M rhel5.6.0 -smp 2 -daemonize -drive file=/tmp/serverfinal.img

The second drive shows up as /dev/sdb; go ahead and re-execute tune2fs from within the VM:

[root@localhost ~]# tune2fs -L uec-rootfs /dev/sdb

Extract Kernel and Initrd for OpenStack

Fedora creates a small boot partition separate from the LVM we extracted previously. We'll need to mount it, and copy out the kernel and initrd. First, attach the image to a loopback device and map the partitions. The -f flag picks the first free loop device; the commands below assume that is /dev/loop0.

[bbockelm@localhost ~]$ sudo /sbin/losetup -f /tmp/server.img
[bbockelm@localhost ~]$ sudo /sbin/kpartx -a /dev/loop0

The boot partition should now be available at /dev/mapper/loop0p1. Mount this:

[bbockelm@localhost ~]$ sudo mkdir /tmp/server_image/
[bbockelm@localhost ~]$ sudo mount /dev/mapper/loop0p1 /tmp/server_image/

Now, copy out the kernel and initrd:

[bbockelm@localhost ~]$ cp /tmp/server_image/vmlinuz-2.6.40.3-0.fc15.x86_64 ~
[bbockelm@localhost ~]$ cp /tmp/server_image/initramfs-2.6.40.3-0.fc15.x86_64.img ~

Unmount and unmap:

[bbockelm@localhost ~]$ sudo umount /tmp/server_image
[bbockelm@localhost ~]$ sudo /sbin/kpartx -d /dev/loop0
[bbockelm@localhost ~]$ sudo /sbin/losetup -d /dev/loop0

Upload into OpenStack

We need to bundle, then upload the kernel, initrd, and finally the image. First, the kernel:

[bbockelm@localhost ~]$ euca-bundle-image -i ~/vmlinuz-2.6.40.3-0.fc15.x86_64 --kernel true

Checking image

Encrypting image

Splitting image...

Part: vmlinuz-2.6.40.3-0.fc15.x86_64.part.00

Generating manifest /tmp/vmlinuz-2.6.40.3-0.fc15.x86_64.manifest.xml

[bbockelm@localhost ~]$ euca-upload-bundle -b testbucket -m /tmp/vmlinuz-2.6.40.3-0.fc15.x86_64.manifest.xml

Checking bucket: testbucket

Uploading manifest file

Uploading part: vmlinuz-2.6.40.3-0.fc15.x86_64.part.00

Uploaded image as testbucket/vmlinuz-2.6.40.3-0.fc15.x86_64.manifest.xml

[bbockelm@localhost ~]$ euca-register testbucket/vmlinuz-2.6.40.3-0.fc15.x86_64.manifest.xml

IMAGE aki-0000000a

Write down the kernel ID; it is aki-0000000a above. Then, the initrd:

euca-bundle-image -i ~/initramfs-2.6.40.3-0.fc15.x86_64.img --ramdisk true
euca-upload-bundle -b testbucket -m /tmp/initramfs-2.6.40.3-0.fc15.x86_64.img.manifest.xml
euca-register testbucket/initramfs-2.6.40.3-0.fc15.x86_64.img.manifest.xml

My initrd's ID was ari-0000000b. Finally, the disk image itself:

euca-bundle-image --kernel aki-0000000a --ramdisk ari-0000000b -i /tmp/serverfinal.img -r x86_64

This will save the bundle into /tmp, with a manifest named "serverfinal.img.manifest.xml". I didn't particularly care for the name, so I changed it to "fedora-15.img.manifest.xml". Now, upload and register:

euca-upload-bundle -b testbucket -m /tmp/fedora-15.img.manifest.xml
euca-register testbucket/fedora-15.img.manifest.xml
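The three bundle/upload/register rounds all follow the same naming pattern, and it is easy to mistype one of the long manifest names. Judging only from the output shown in this post, the manifest lands in /tmp under the input file's base name plus ".manifest.xml", and the name to register is the bucket followed by that same file name. A small sketch of that pattern for a bundle you have not renamed (the helper names manifest_path and register_name are hypothetical):

```shell
# Path of the manifest that bundling appears to write for an input file,
# based on the transcripts above (default /tmp destination assumed).
manifest_path() {
    echo "/tmp/$(basename "$1").manifest.xml"
}

# Name to hand to euca-register: bucket, then the manifest's file name.
# $1 = bucket, $2 = input file that was bundled
register_name() {
    echo "$1/$(basename "$2").manifest.xml"
}

# Example, for the kernel:
# euca-upload-bundle -b testbucket -m "$(manifest_path ~/vmlinuz-2.6.40.3-0.fc15.x86_64)"
# euca-register "$(register_name testbucket ~/vmlinuz-2.6.40.3-0.fc15.x86_64)"
```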

Congratulations! You now have a brand-new Fedora-15 image ready to use. Fire up HybridFox and see if you were successful.